A 4U AI server with a high price-performance ratio, supporting multiple configurations of 8 or 16 GPUs of various types, and suited to application scenarios such as model inference and video encoding and decoding.

A 4U AI server with high compatibility, supporting 1 to 8 PCIe datacenter GPUs and providing an intelligent computing foundation adaptable to a wide range of scenarios.

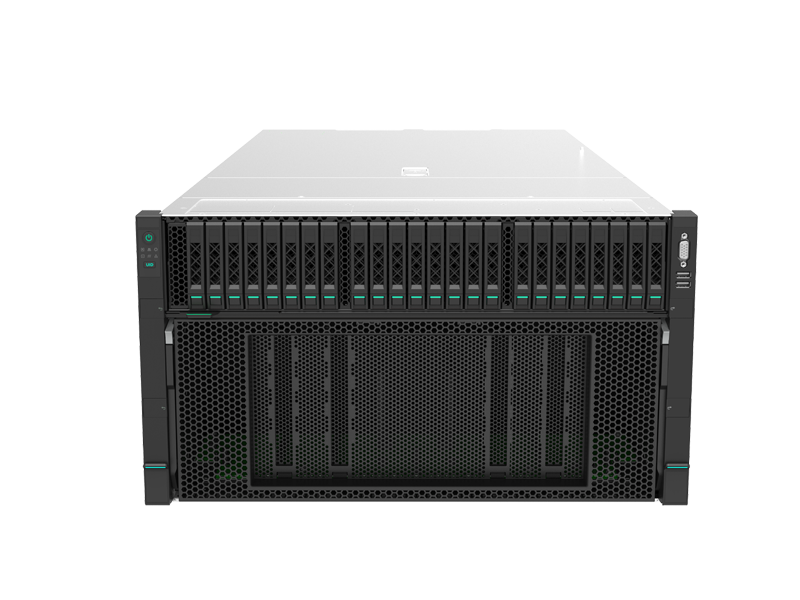

A 6U AI server with high scalability, compatible with 8 high-performance OAMs (OCP Accelerator Modules), and supporting AI workloads such as large model training, fine-tuning, inference, and recommendation.

A 6U high-performance AI server equipped with 8 fully interconnected, high-bandwidth GPUs. It can run inference on large models with hundreds of billions of parameters on a single server, helping to accelerate the intelligent transformation of businesses.